Recently I have been working on a project in which the tester used a tool called SoapUI. SoapUI makes it easy to create XML-based tests for web services. Being a big fan of Continuous Integration, I thought it would be cool to include SoapUI in our CI build cycle. Here's what you need to do to make it work:

1. Install SoapUI

You can get a free version from the SoapUI website. Take note of the installation location; for me this was "C:\Program Files (x86)\SmartBear\SoapUI-4.6.4". I put its bin folder, which contains testrunner.bat, in the app.config of my test project under the SoapUIHome key.

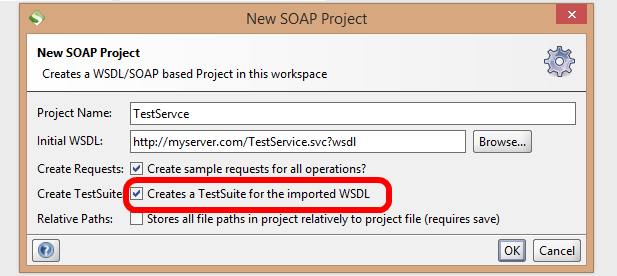

2. Create a SoapUI project

Start SoapUI and choose File -> New Project. Point it to the WSDL of the service you want to test and let it create a test suite.

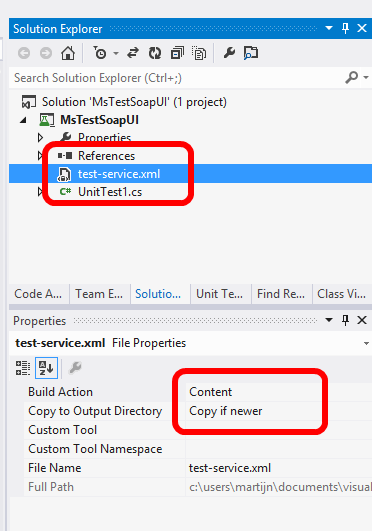

3. Add the SoapUI project to your MSTest project

In SoapUI, right-click the project node and choose Save Project As. Save the XML file into your unit test project folder, include it in the project, and set its Copy to Output Directory property to "Copy if newer".

4. Running SoapUI from MSTest

This is the tricky part: how can we get SoapUI to run our tests from a unit test? It turns out SoapUI ships with a command-line runner, testrunner.bat (in its bin folder), which makes this relatively straightforward. The unit test starts testrunner.bat as a separate process and redirects SoapUI's output to the test output. Here's what the code looks like (a zip of the project is included at the end of this post):

```csharp
// namespaces needed at the top of the test class file
using System;
using System.Configuration;   // reference System.Configuration.dll for ConfigurationManager
using System.Diagnostics;
using System.IO;
using System.Text;
using Microsoft.VisualStudio.TestTools.UnitTesting;

/// <summary>
/// Runs the SoapUI test case named testName from the given suite and project file.
/// </summary>
private void RunSoapUItest(string soapProject, string testSuiteName, string testName)
{
    const string fileName = "cmd.exe";
    var soapProjectFileName = Path.GetFullPath(soapProject);

    // -s selects the test suite, -c the test case; the project file comes last
    var arguments = string.Format("/C testrunner.bat -s\"{0}\" -c\"{1}\" \"{2}\"", testSuiteName, testName, soapProjectFileName);

    // SoapUI's bin folder, for me C:\Program Files (x86)\SmartBear\SoapUI-4.6.4\bin
    var soapHome = ConfigurationManager.AppSettings["SoapUIHome"];

    // start a process and hook up the input/output
    using (var process = new Process
    {
        StartInfo = new ProcessStartInfo
        {
            FileName = fileName,
            Arguments = arguments,
            WorkingDirectory = soapHome,
            CreateNoWindow = true,
            ErrorDialog = false,
            RedirectStandardError = true,
            RedirectStandardOutput = true,
            UseShellExecute = false
        },
        EnableRaisingEvents = true
    })
    {
        // pipe SoapUI's standard output to the test output
        process.OutputDataReceived += (sender, args) =>
        {
            if (args.Data != null)
            {
                Console.WriteLine(args.Data);
            }
        };

        // collect everything SoapUI writes to standard error
        var errorBuilder = new StringBuilder();
        process.ErrorDataReceived += (sender, args) =>
        {
            if (args.Data != null)
            {
                errorBuilder.AppendLine(args.Data);
            }
        };

        process.Start();
        process.BeginOutputReadLine();
        process.BeginErrorReadLine();
        process.WaitForExit(); // wait for SoapUI to finish and the redirected streams to drain

        // fail the test if SoapUI reported any errors
        var errorMessage = errorBuilder.ToString();
        if (!string.IsNullOrEmpty(errorMessage))
        {
            Assert.Fail("Test with name '{0}' failed. {1} {2}", testName, Environment.NewLine, errorMessage);
        }
    }
}
```

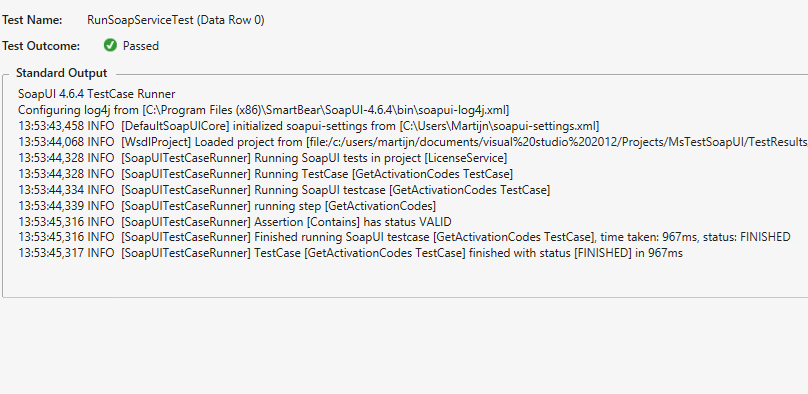
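The argument string built in RunSoapUItest is easy to get wrong once suite or test case names contain spaces or quotes. One option is to pull it into a small helper that can be unit tested without SoapUI installed. This is a hypothetical sketch, not part of the original project: the class name SoapUiArguments is made up, and it assumes names containing a double quote should simply be rejected, since such a quote would end the quoted cmd.exe argument early.

```csharp
using System;
using System.IO;

// Hypothetical helper, not part of the original project: builds the cmd.exe
// argument string used by RunSoapUItest so the quoting can be unit tested.
public static class SoapUiArguments
{
    public static string Build(string testSuiteName, string testCaseName, string projectFile)
    {
        RequireName(testSuiteName, nameof(testSuiteName));
        RequireName(testCaseName, nameof(testCaseName));

        // Resolve relative paths (e.g. TestData\test-service.xml) against the current directory.
        var fullPath = Path.GetFullPath(projectFile);

        // Same shape as in RunSoapUItest: -s selects the suite, -c the test case.
        return string.Format("/C testrunner.bat -s\"{0}\" -c\"{1}\" \"{2}\"",
            testSuiteName, testCaseName, fullPath);
    }

    // Assumption: a name containing a double quote would break the quoted argument, so reject it.
    private static void RequireName(string value, string paramName)
    {
        if (string.IsNullOrWhiteSpace(value))
            throw new ArgumentException("Name must not be empty.", paramName);
        if (value.Contains("\""))
            throw new ArgumentException("Name must not contain double quotes.", paramName);
    }
}
```

Inside RunSoapUItest the arguments line could then become `var arguments = SoapUiArguments.Build(testSuiteName, testName, soapProject);`.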

5. Running a test per SoapUI testCase

A typical SoapUI project contains multiple test cases. To run each test case as a separate test, we can put MSTest's DataSource attribute on the test method. This attribute causes the test to run once for every 'row' in the XML data source:

```csharp
[DataSource("Microsoft.VisualStudio.TestTools.DataSource.XML", "TestData\\test-service.xml", "testCase", DataAccessMethod.Random)]
```

The table name here is "testCase", so every testCase element in the SoapUI project becomes a row, and each test case runs separately. You have to make sure the XML file gets deployed with the test (I used a DeploymentItem attribute for that).
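To check which rows the DataSource attribute should produce, it can help to list the test cases in the SoapUI project yourself. The sketch below uses LINQ to XML and assumes, as the `DataRow["name"]` lookup suggests, that each test case is an element with the local name testCase carrying a name attribute. It only approximates MSTest's XML data source, which does its own parsing, and the class name SoapUiProjectReader is made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Illustration only: lists the test case names that should show up as DataSource rows.
public static class SoapUiProjectReader
{
    public static IReadOnlyList<string> GetTestCaseNames(XDocument project)
    {
        return project
            .Descendants()
            // Match on the local name so the namespace prefix on the elements does not matter.
            .Where(e => e.Name.LocalName == "testCase")
            // The explicit string conversion yields null when the attribute is missing.
            .Select(e => (string)e.Attribute("name"))
            .Where(name => !string.IsNullOrEmpty(name))
            .ToList();
    }
}
```

For example, `SoapUiProjectReader.GetTestCaseNames(XDocument.Load(@"TestData\test-service.xml"))` returns the names in document order.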

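```csharp
/// <summary>
/// Set by MSTest; required for TestContext.DataRow.
/// </summary>
public TestContext TestContext { get; set; }

/// <summary>
/// Runs one SoapUI test case per row of the data source.
/// </summary>
[TestMethod]
[DeploymentItem(@"test-service.xml", "TestData")]
[DataSource("Microsoft.VisualStudio.TestTools.DataSource.XML", "TestData\\test-service.xml", "testCase", DataAccessMethod.Random)]
public void RunSoapServiceTest()
{
    // the name attribute of the current testCase element
    var testCaseName = TestContext.DataRow["name"].ToString();
    RunSoapUItest(@"TestData\test-service.xml", "BasicHttpBinding_ILicenseService TestSuite", testCaseName);
}
```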

I used DeploymentItem because I don't want absolute paths in the tests (the build server needs to run them as well). Run the test and the SoapUI output shows up in Visual Studio and in the build output. Sweet :) To run this in a CI build, you only need to install SoapUI on the build server, point SoapUIHome at its bin folder, and all should be well.
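Since everything hinges on SoapUI being installed where SoapUIHome points, a small pre-flight check can turn a confusing cmd.exe error on a fresh build server into a clear message. This is a hypothetical helper, not part of the original project; the class name SoapUiInstallation is made up, and it only verifies that testrunner.bat exists in the configured folder.

```csharp
using System;
using System.IO;

// Hypothetical pre-flight check, not part of the original project: fail fast with a
// clear message when SoapUIHome is empty or does not contain testrunner.bat.
public static class SoapUiInstallation
{
    public static string RequireTestRunner(string soapHome)
    {
        if (string.IsNullOrWhiteSpace(soapHome))
            throw new InvalidOperationException(
                "The SoapUIHome app setting is empty; point it at the SoapUI bin folder.");

        var runner = Path.Combine(soapHome, "testrunner.bat");
        if (!File.Exists(runner))
            throw new InvalidOperationException(
                $"testrunner.bat not found in '{soapHome}'. Is SoapUI installed on this machine?");

        // Full path to the runner, in case the caller wants to log it.
        return runner;
    }
}
```

Calling `SoapUiInstallation.RequireTestRunner(soapHome)` right after reading the setting in RunSoapUItest makes the test fail with that message, because MSTest reports an unhandled exception as a test failure.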

Here's the project:

MsTestSoapUI.zip (12.08 kb)

Happy Testing!